We may think of the vectors of $K^3$ with a zero third component as the vector space $K^2$ inside $K^3$.

Let $S = \{A \in M_n(K) : A^T = A\}$, where $M_n(K)$ is the set of $n$ by $n$ matrices with entries in $K$, and $A^T$ denotes the transpose of the matrix $A$, that is, the matrix obtained by interchanging rows and columns. Then $S$ is the set of symmetric matrices of $M_n(K)$. This set is nonempty, and using the properties of transposition we have, given $A, B \in S$ and $\lambda, \mu \in K$, that $(\lambda A + \mu B)^T = \lambda A^T + \mu B^T = \lambda A + \mu B$. This proves that $\lambda A + \mu B \in S$, and therefore that $S$ is a vector subspace of $M_n(K)$.

Consider the set of polynomials in $K[x]$ with degree smaller than or equal to $n$: $K_n[x] = \{p \in K[x] : \deg p \le n\}$. Using the conventional polynomial sum and product by elements of $K$, it is easy to check that this is a vector subspace. Remember that it is conventional to assign the degree $-\infty$ to the zero polynomial, so we have $0 \in K_n[x]$, and everything makes sense.

The dimension of the row space is equal to the dimension of the column space. In other words, the number of linearly independent rows is equal to the number of linearly independent columns. This may seem obvious, but it is actually a subtle fact that requires proof. The rank of a matrix is this number of linearly independent rows or columns.

The natural bases for the four fundamental subspaces are provided by the SVD, the Singular Value Decomposition, of $A$: $A = U\Sigma V^T$. The matrices $U$ and $V$ are orthogonal, which you can think of as multidimensional generalizations of two dimensional rotations. The matrix $\Sigma$ is diagonal, so its only nonzero elements are on the main diagonal.

The shape and size of these matrices are important. The matrix $A$ is rectangular, say with $m$ rows and $n$ columns; $U$ is square, with the same number of rows as $A$; $V$ is also square, with the same number of columns as $A$; and $\Sigma$ is the same size as $A$. Here is a picture of this equation when $A$ is tall and skinny, so $m > n$. The diagonal elements of $\Sigma$ are the singular values, shown as blue dots. All of the other elements of $\Sigma$ are zero. The signs and the ordering of the columns in $U$ and $V$ can always be taken so that the singular values are nonnegative and arranged in decreasing order.

For any diagonal matrix like $\Sigma$, it is clear that the rank, which is the number of independent rows or columns, is just the number of nonzero diagonal elements. In MATLAB, the SVD is computed by the `svd` function. With inexact floating point computation, it is appropriate to take the rank to be the number of nonnegligible diagonal elements. So the function `r = rank(A)` counts the number of singular values larger than a tolerance.

Multiply both sides of $A = U\Sigma V^T$ on the right by $V$. Since $V^T V = I$, this gives $AV = U\Sigma$. I've drawn a green line after column $r$ to show the rank. The only nonzero elements of $\Sigma$, the singular values, are the blue dots. Write out this equation column by column: $Av_j = \sigma_j u_j$ for $j = 1, \ldots, r$, and $Av_j = 0$ for $j = r+1, \ldots, n$. This says that $A$ maps the first $r$ columns of $V$ onto nonzero vectors and maps the remaining columns of $V$ onto zero. So the columns of $V$, which are known as the right singular vectors, form a natural basis for the first two fundamental spaces.

Here is an example involving lines in two dimensions. The matrix $A$ is the outer product of two starting vectors, `A = u*v'`. The first left and right singular vectors are our starting vectors, normalized to have unit length. These vectors provide bases for the one dimensional column and row spaces. The only nonzero singular value is the product of the normalizing factors.
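The rank-one case described above, where $A$ is the outer product of two vectors, can be checked by hand. The following is a minimal sketch in plain Python rather than MATLAB. The vectors `u` and `v` are hypothetical values chosen for illustration, not the ones from the original example, and the helper names (`norm`, `outer`, `numerical_rank`) are assumptions of this sketch.

```python
import math

def norm(x):
    """Euclidean length of a vector given as a list of floats."""
    return math.sqrt(sum(t * t for t in x))

def outer(u, v):
    """Outer product u*v' returned as a list of rows."""
    return [[ui * vj for vj in v] for ui in u]

# Hypothetical starting vectors in two dimensions (an assumption).
u = [3.0, 4.0]
v = [5.0, 12.0]
A = outer(u, v)

# First left and right singular vectors: the starting vectors,
# normalized to have unit length.
u1 = [t / norm(u) for t in u]
v1 = [t / norm(v) for t in v]

# The only nonzero singular value is the product of the normalizing factors.
sigma1 = norm(u) * norm(v)

# Rebuild A from its rank-one SVD, A = sigma1 * u1 * v1'.
A_rebuilt = [[sigma1 * a for a in row] for row in outer(u1, v1)]

def numerical_rank(singular_values, tol):
    """Count the singular values larger than a tolerance."""
    return sum(1 for s in singular_values if s > tol)

# A is 2-by-2, so it has two singular values; the second one is zero.
r = numerical_rank([sigma1, 0.0], tol=1e-12)
```

The tolerance `1e-12` is an arbitrary choice for this sketch; it is not claimed to be the default that MATLAB's `rank` uses.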

Any vector space $V$ is itself a (trivial) vector subspace of $V$. The set $\{0\}$ consisting of the zero vector only is also a (trivial) vector subspace.

If we think of $\mathbb{C}$ as an $\mathbb{R}$-vector space, then $\mathbb{R}$ is a vector subspace. However, if we think of $\mathbb{C}$ as a vector space over $\mathbb{C}$, $\mathbb{R}$ is not a vector subspace, since it is not closed under scalar multiplication.

The set of vectors of $K^3$ with a zero third component is a vector subspace of $K^3$. Indeed, if we add two elements of $K^3$ with a zero third component, we also obtain an element with a zero third component. Similarly, if we multiply a vector with a zero third component by any scalar, we get a vector with a zero third component.
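The closure arguments above can be tried on concrete vectors. This is only a sketch under stated assumptions: the field is taken to be the rationals (via `fractions.Fraction`), the sample vectors are made up, and the second part assumes the scalar multiplication example concerns the real numbers inside the complex numbers.

```python
from fractions import Fraction

def in_W(vec):
    """Membership test: length-3 vectors whose third component is zero."""
    return len(vec) == 3 and vec[2] == 0

def add(u, v):
    """Componentwise vector sum."""
    return tuple(a + b for a, b in zip(u, v))

def scale(c, u):
    """Multiply every component of u by the scalar c."""
    return tuple(c * a for a in u)

# Hypothetical sample vectors with a zero third component.
u = (Fraction(1), Fraction(2), Fraction(0))
v = (Fraction(-3, 2), Fraction(5), Fraction(0))

sum_stays_in_W = in_W(add(u, v))
multiple_stays_in_W = in_W(scale(Fraction(7, 3), u))

# Scalars matter: multiplying a real number by the complex scalar i
# gives a number that is no longer real.
x = 2.0
scaled = 1j * x
scaled_is_real = scaled.imag == 0
```

Checking a few samples illustrates the argument but does not replace it; the proof is the general statement about the third component.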

That is to say, a vector subspace of $V$ is nothing but a subset of $V$ that is also a vector space, under the same vector addition and scalar multiplication. The following proposition gives an easy characterization of vector subspaces.

Proposition. Let $V$ be a $K$-vector space and let $W \subseteq V$ be a nonempty subset. Then $W$ is a vector subspace if and only if for all $u, v \in W$ and all $\lambda, \mu \in K$, we have that $\lambda u + \mu v \in W$.

If $W$ is a vector subspace, the condition clearly holds by definition. Conversely, if the condition holds, set $\lambda = \mu = 1$, and then given $u, v \in W$ we have that $u + v \in W$. Also, if we set $\mu = 0$, then for all $\lambda \in K$ and $u \in W$, $\lambda u = \lambda u + 0 u \in W$, proving that $W$ is also closed under scalar multiplication.
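The single condition in the proposition lends itself to a quick numerical experiment. The sketch below is an illustration, not a proof: the function name `passes_criterion`, the two subsets of the plane, and the sample points are all hypothetical, and a finite check of this kind can only refute the subspace property, never establish it.

```python
from fractions import Fraction
from itertools import product

def passes_criterion(samples, scalars, is_member):
    """Check that lam*u + mu*v stays in the subset for the given samples.

    Returns False as soon as one linear combination leaves the subset.
    """
    for u, v in product(samples, repeat=2):
        for lam, mu in product(scalars, repeat=2):
            combo = tuple(lam * a + mu * b for a, b in zip(u, v))
            if not is_member(combo):
                return False
    return True

scalars = [Fraction(0), Fraction(1), Fraction(-2), Fraction(1, 3)]

def on_line(p):
    """Line through the origin: y = 2x."""
    return p[1] == 2 * p[0]

def on_shifted_line(p):
    """Line that misses the origin: y = 2x + 1."""
    return p[1] == 2 * p[0] + 1

line_samples = [(Fraction(1), Fraction(2)), (Fraction(-3), Fraction(-6))]
shifted_samples = [(Fraction(0), Fraction(1)), (Fraction(1), Fraction(3))]

line_ok = passes_criterion(line_samples, scalars, on_line)
shifted_ok = passes_criterion(shifted_samples, scalars, on_shifted_line)
```

The shifted line fails already for $\lambda = \mu = 0$, because the zero vector does not lie on it.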

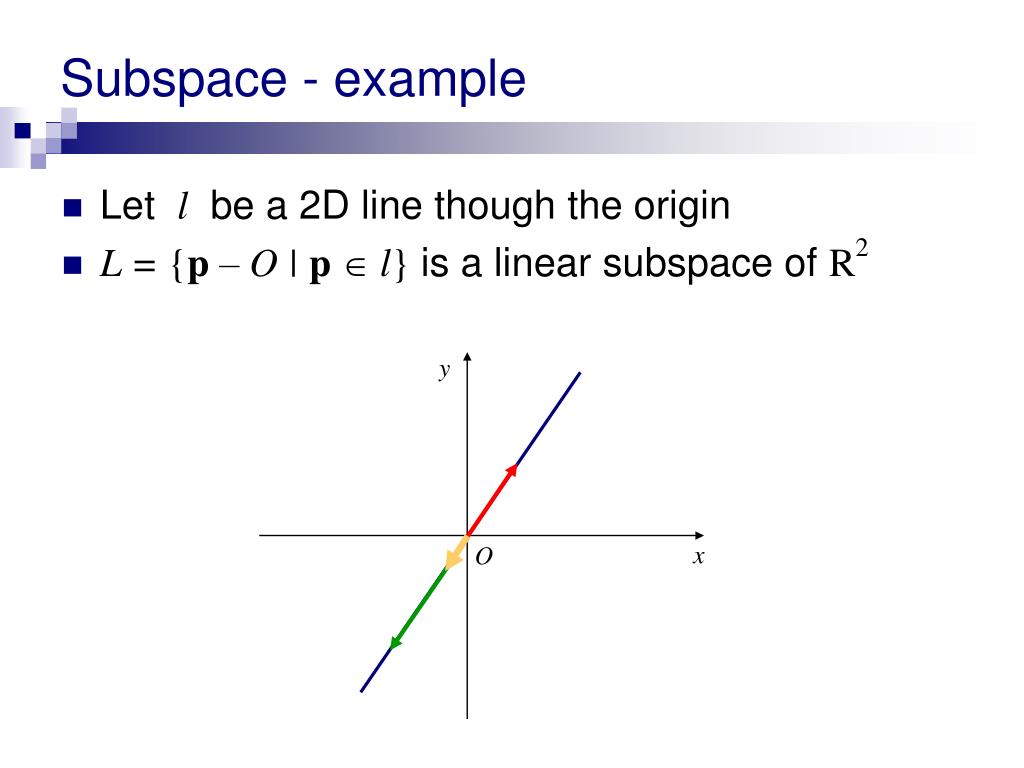

To do so, we need the following definition. Throughout this and the upcoming lessons, $K$ will always denote a field.

Definition. Let $V$ be a $K$-vector space. A nonempty subset $W \subseteq V$ is said to be a vector subspace of $V$ if it is closed under the vector sum (that is, whenever $u, v \in W$ we have $u + v \in W$) and under the scalar multiplication (that is, whenever $\lambda \in K$ and $u \in W$ we have $\lambda u \in W$).

Subspace definition in linear algebra

In this lesson we are going to see how to build vector spaces from previously-known ones.
